Web scraping with Node NICAR12

slides

http://j.mp/node-nicar

code

http://j.mp/node-nicar-js

me

@A_L

JavaScript running locally.

That means you get the filesystem, HTTP, and jQuery.

You know this!

$("h2").each(function() {
  console.log($(this).html());
});

Set up the room.

brew install node

curl http://npmjs.org/install.sh | sh

npm install -g jsdom

npm install -g request

Add the Node and modules paths to your .profile or .bashrc:

export NODE_PATH="/usr/local/lib/node:/usr/local/lib/node_modules"

var fs = require('fs');
var request = require('request');
var jsdom = require('jsdom').jsdom;

Get a web page.

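var get = function(url, cb) {
  request(url, function (error, response, body) {
    // only pass the body along on a successful (200) response;
    // errors and other status codes are silently ignored here
    if (!error && response.statusCode == 200) {
      cb(body);
    }
  });
};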
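The get above drops failures without a word. One possible variant, sketched here under assumptions, reports them through an error-first callback instead. The names makeGet, fetchFn and fakeRequest are hypothetical and not part of the talk's code; fakeRequest stands in for request so the sketch runs without the network.

```javascript
// makeGet wraps any request-style function whose callback
// receives (error, response, body). Hypothetical helper.
function makeGet(fetchFn) {
  return function get(url, cb) {
    fetchFn(url, function (error, response, body) {
      if (error) {
        return cb(error); // network-level failure
      }
      if (response.statusCode !== 200) {
        return cb(new Error("HTTP " + response.statusCode + " for " + url));
      }
      cb(null, body); // success: the error slot is null
    });
  };
}

// Stand-in for `request`, with made-up URLs and responses.
function fakeRequest(url, done) {
  if (url === "http://example.com/ok") {
    done(null, { statusCode: 200 }, "<h2>hello</h2>");
  } else {
    done(null, { statusCode: 404 }, "");
  }
}

var get = makeGet(fakeRequest);

get("http://example.com/ok", function (err, body) {
  console.log(err, body);
});

get("http://example.com/missing", function (err) {
  console.log(err.message);
});
```

With the real module, makeGet(require('request')) should behave the same way, assuming request keeps the (error, response, body) callback shape used above.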

Get a (jQueryified) DOM!

var createDocument = function(html, cb) {
  // build a DOM from the HTML string
  var document = jsdom(html);
  var window = document.createWindow();
  // load jQuery into the window, then call cb(window)
  jsdom.jQueryify(window, cb);
};

Scrape.

var scrape = function(window) {
  // do stuff with your window
};

So far:

get(url, function(body) {
  createDocument(body, function(window) {
    // do stuff with your window
  });
});

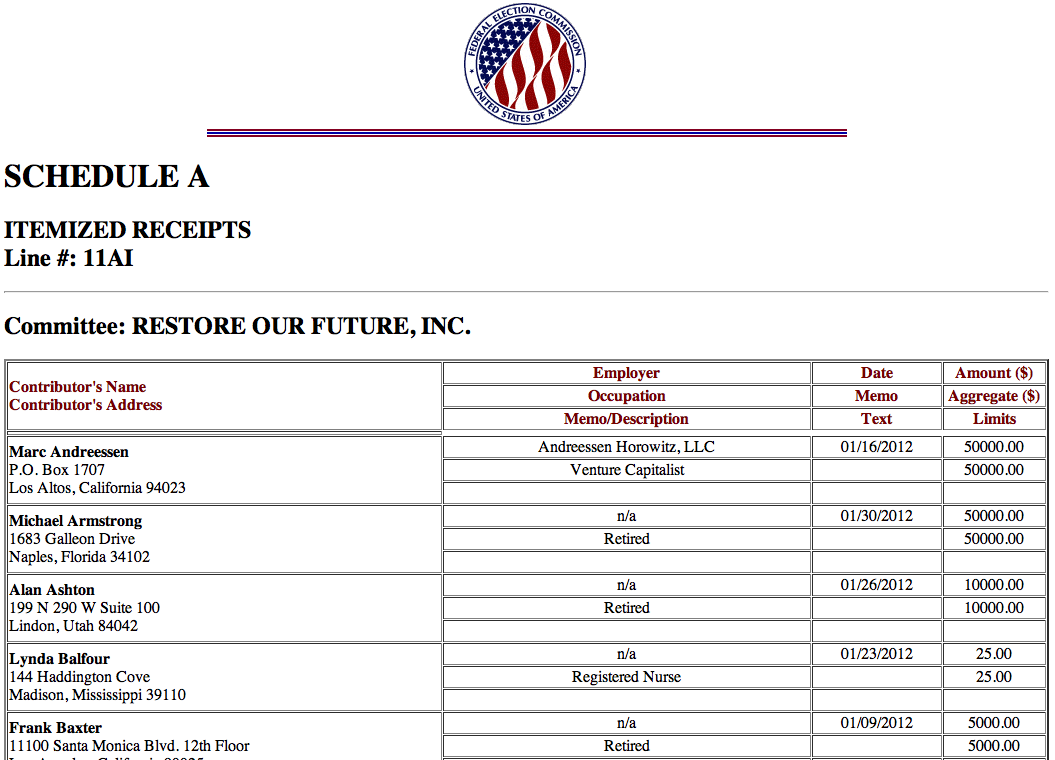

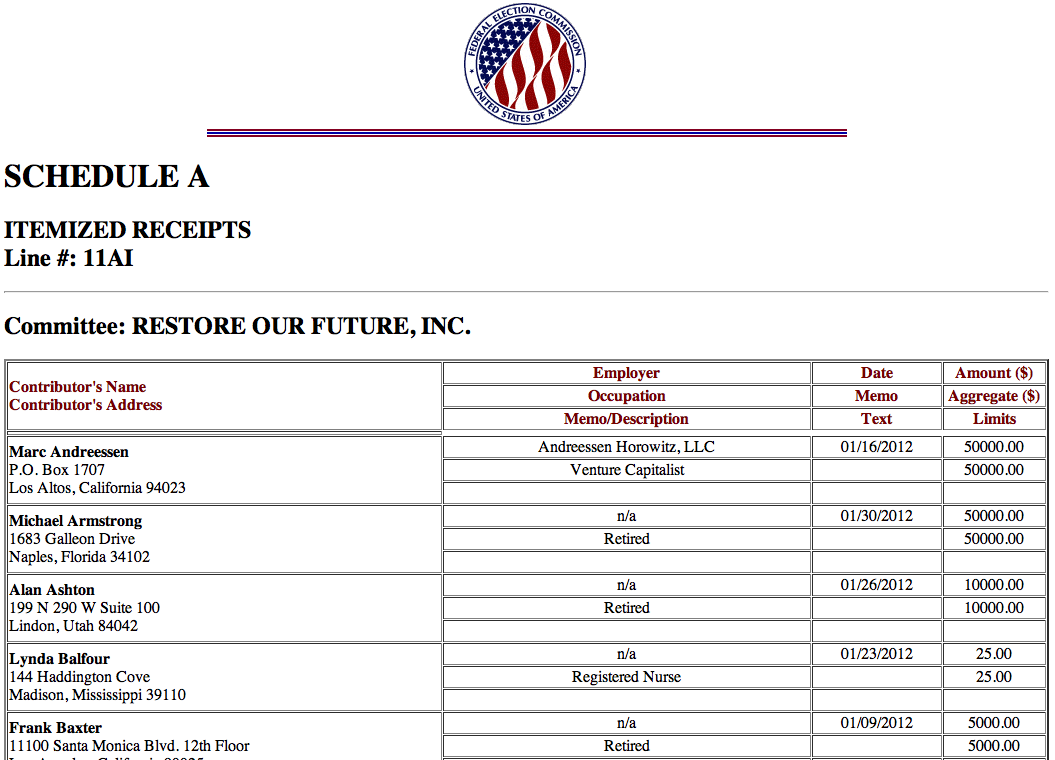

FEC.gov

var url = "http://query.nictusa.com/cgi-bin/dcdev" +
          "/forms/C00490045/766953/sa/11AI";

get(url, function(body) {
  createDocument(body, function(window) {
    // your scraper
    var $ = window.$;
    var json = [];
    // each left-aligned cell holds one donor
    var donors = $("table tr td[align=LEFT]");
    donors.each(function() {
      // the name is the markup before the first <br>
      var name = $($(this).html().split("<br>")[0]).text();
      json.push(name);
    });
  });
});
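The name extraction is the dense part: take the cell's HTML, keep what comes before the first <br>, and reduce that to text. A minimal sketch of the same idea on a plain string follows. The sample cell is made up and the real FEC markup may differ; the regex tag stripping is a swapped-in shortcut for this sketch, not the jQuery .text() call used in the scraper.

```javascript
// Hypothetical cell contents, for illustration only.
var cellHtml = "<b>Jane Example</b><br>123 Main St<br>Anytown, CA 90000";

// firstLineText is a hypothetical helper name.
function firstLineText(html) {
  // everything before the first <br>
  var firstLine = html.split("<br>")[0];
  // crude tag stripping; fine for a sketch, not a general HTML parser
  return firstLine.replace(/<[^>]*>/g, "").trim();
}

console.log(firstLineText(cellHtml));
```

Splitting on the literal string "<br>" only works if the serialized HTML spells the tag exactly that way, so it is worth checking what .html() returns for the page at hand.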

#=> Marc Andreessen

Michael Armstrong

Alan Ashton

Lynda Balfour

...

Save it as JSON.

var jsonStr = JSON.stringify(json);
fs.writeFileSync("donors.json", jsonStr);
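A quick way to check the saved file is to read it back and parse it. The sketch below assumes a short hard-coded list and writes to the system temp directory rather than the working directory; both choices are for illustration only.

```javascript
var fs = require("fs");
var os = require("os");
var path = require("path");

// A few names from the scrape, hard-coded for the sketch.
var json = ["Marc Andreessen", "Michael Armstrong", "Alan Ashton"];

// Temp-dir location is an assumption, not where the talk saves the file.
var file = path.join(os.tmpdir(), "donors.json");

// The extra arguments pretty-print with two-space indentation.
fs.writeFileSync(file, JSON.stringify(json, null, 2));

// Read the file back and parse it to confirm it is valid JSON.
var roundTrip = JSON.parse(fs.readFileSync(file, "utf8"));
console.log(roundTrip.length);
```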

["Marc Andreessen","Michael Armstrong","Alan Ashton",
"Lynda Balfour","Frank Baxter","Glen Beck",
"James Berardinelli","Andrew Bernstein",
"Robert D Beyer","Lynn Booth","David Bradford",
"James Brown","Frank Bruno","Buena Vista Investments LLC",...]

Thank you.

Al Shaw NICAR 2012 @A_L